Elon Poll – May 15, 2024

More than three-fourths of Americans fear abuses of artificial intelligence will affect the 2024 presidential election; many are not confident they can detect faked photos, videos or audio

Seventy-eight percent of American adults expect that abuses of artificial intelligence (AI) systems will affect the outcome of the 2024 presidential election, according to a new national survey by the Elon Poll and the Imagining the Digital Future Center at Elon University. The survey finds:

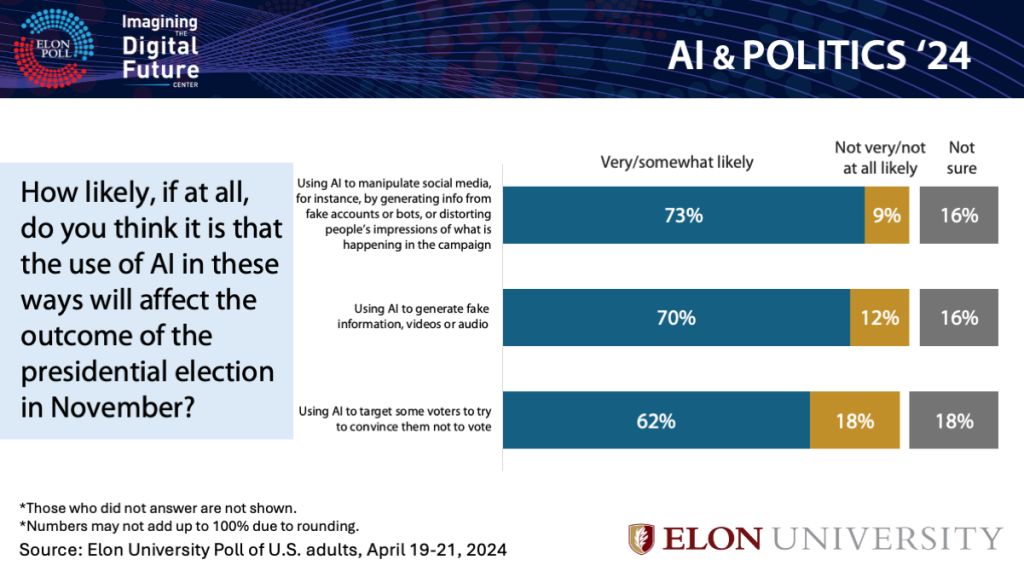

- 73% of Americans believe it is “very” or “somewhat” likely AI will be used to manipulate social media to influence the outcome of the presidential election – for example, by generating information from fake accounts or bots or distorting people’s impressions of the campaign.

- 70% say it is likely the election will be affected by the use of AI to generate fake information, video and audio material.

- 62% say the election is likely to be affected by the targeted use of AI to convince some voters not to vote.

- In all, 78% say at least one of these abuses of AI will affect the presidential election outcome. More than half think all three abuses are at least somewhat likely to occur.

This survey was done in collaboration with Elon’s Imagining the Digital Future Center, which works to discover and broadly share a diverse range of opinions, ideas and original research about the impact of digital change.

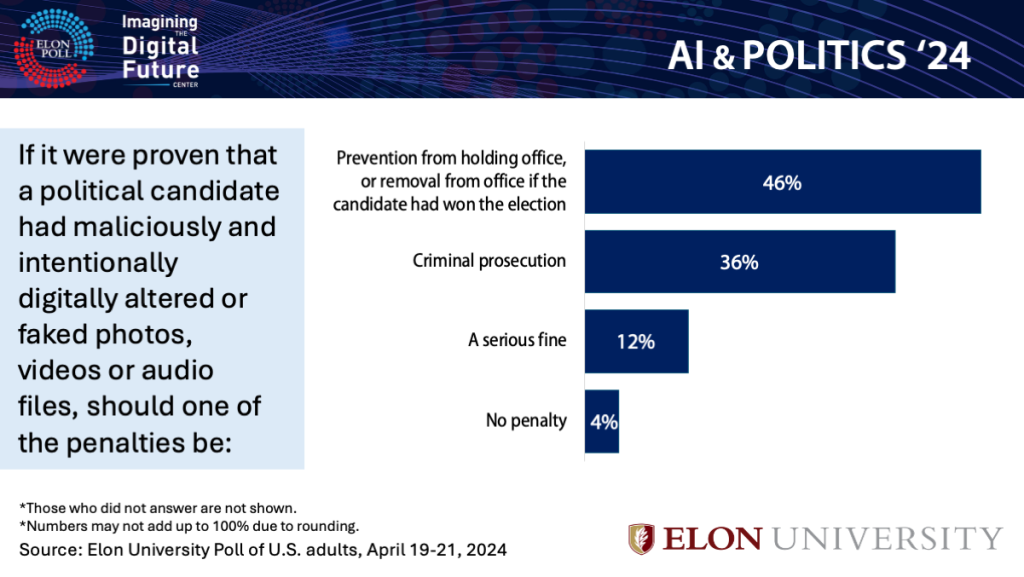

These concerns about AI and the election put Americans in a punishing frame of mind. Fully 93% think some penalty should be applied to candidates who maliciously and intentionally alter or fake photos, videos or audio files.

- 46% think the punishment should be removal from office.

- 36% say offenders should face criminal prosecution.

By a nearly 8-1 margin, more Americans feel the use of AI will hurt the coming election than help it: 39% say it will hurt and 5% think it will help. Some 37% say they are not sure.

Americans’ concerns about the use and impact of AI systems occur in a challenging and confusing news and information environment.

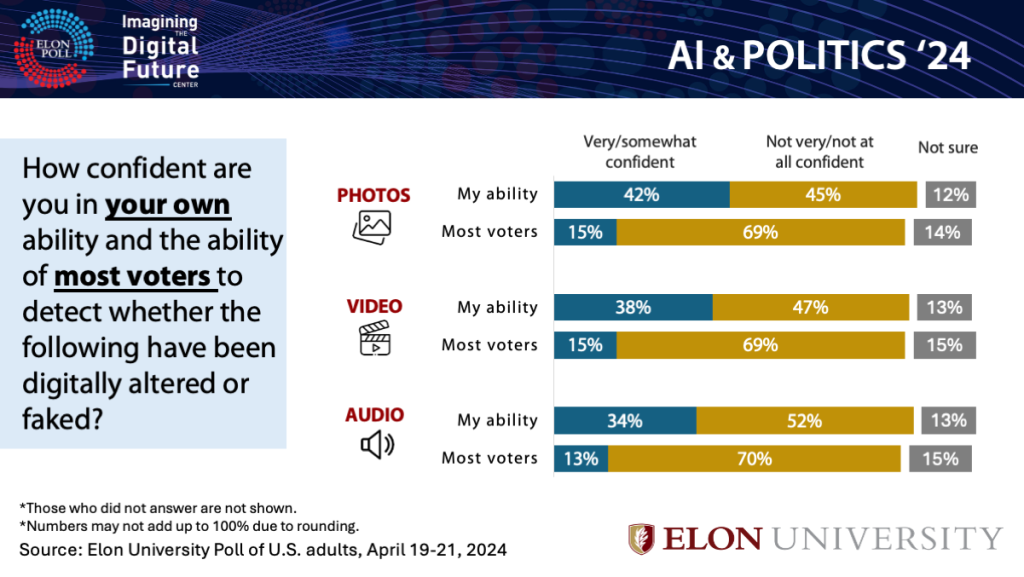

- 52% are not confident they can detect altered or faked audio material.

- 47% are not confident they can detect altered videos.

- 45% say they are not confident they can detect faked photos.

They have far less faith in the capacity of others to detect fakes: About 7-in-10 are not confident in most voters’ ability to detect photos, videos and audio that have been altered or faked.

“Misinformation in elections has been around since before the invention of computers, but many worry about the sophistication of AI technology in 2024 giving bad actors an accessible tool to spread misinformation at an unprecedented scale,” said Jason Husser, professor of political science and director of the Elon University Poll. “We know that most voters are aware of AI risks to the 2024 election. However, the behavioral implications of that awareness will remain unclear until we see the aftermath of AI-generated misinformation. An optimistic hope is that risk-aware voters may approach information in the 2024 cycle with heightened caution, leading them to become more sophisticated consumers of political information. A pessimistic outlook is that worries about AI-misinformation might translate into diminished feelings of self-efficacy, institutional trust and civic engagement.”

This survey finds that 23% of U.S. adults have used large language models (LLMs) or chatbots like ChatGPT, Gemini, or Claude. It also explores one of the main concerns related to these models: Are they mostly fair or mostly biased when they answer users’ questions about politics and public policy?

Majorities of Democrats, Republicans and independents say they are not sure whether these systems are fair or biased toward different groups. At the same time, there are some notable differences among partisans who do have views. Republicans are generally more wary of AI models than Democrats are. For instance, Republicans are more likely to think AI systems are biased against their views. Surprisingly, they are also more likely than Democrats to think that AI systems are biased against the views of Democrats. Here are the findings:

- 36% of Republicans think AI systems are biased against Republicans, compared with 15% of Democrats who think that.

- 23% of Republicans also (or “paradoxically”) think AI systems are biased against Democrats, compared with 14% of Democrats who think that.

In addition, Republicans are more likely than Democrats to think AI systems are biased against men and White people.

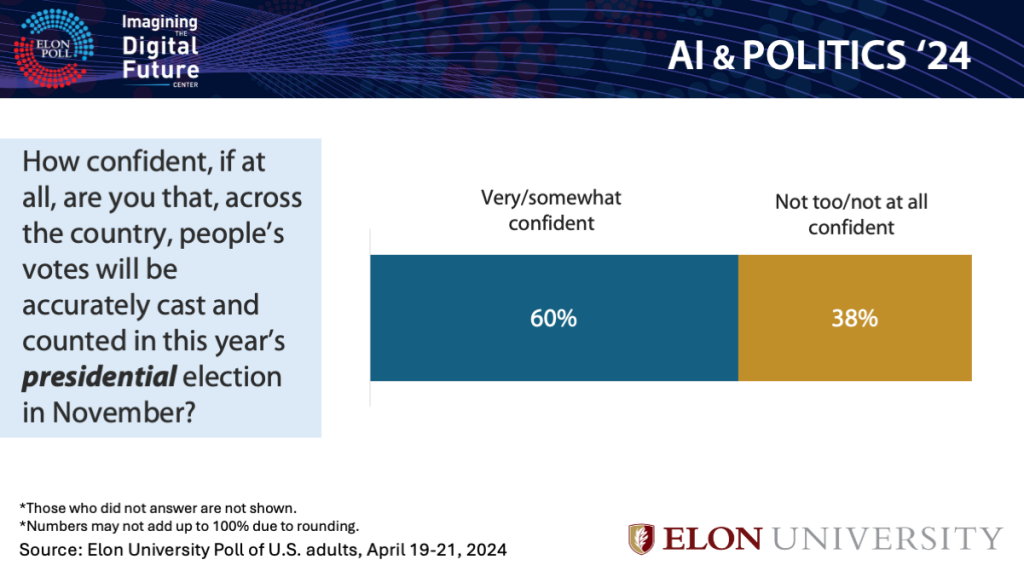

Some 60% of Americans say they are “very” or “somewhat” confident people’s votes will be accurately cast and counted. On this question, 83% of Democrats are confident, while 60% of Republicans are not confident.

“Voters think this election will unfold in an extraordinarily challenging news and information environment,” said Lee Rainie, director of Elon University’s Imagining the Digital Future Center. “They anticipate that new kinds of misinformation, faked material and voter-manipulation tactics are being enabled by AI. What’s worse, many aren’t sure they can sort through the garbage they know will be polluting campaign-related content.”

The survey reported here was conducted from April 19-21, 2024, among 1,020 U.S. adults. It has a margin of error of 3.2 percentage points.
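For readers who want to see how a margin of error relates to sample size, the following is a minimal sketch using the standard formula for a proportion. It assumes a 95% confidence level (z = 1.96) and the most conservative proportion (p = 0.5). Neither assumption is stated in this release, and the `design_effect` parameter is a hypothetical adjustment for survey weighting, not a figure published by the Elon Poll.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96,
                    design_effect: float = 1.0) -> float:
    """Return the margin of error, in percentage points, for a proportion.

    n             -- number of respondents
    p             -- assumed proportion (0.5 gives the widest margin)
    z             -- z-score for the confidence level (1.96 is assumed, i.e. 95%)
    design_effect -- hypothetical variance inflation from weighting (1.0 = none)
    """
    return 100 * z * math.sqrt(design_effect * p * (1 - p) / n)


# Simple-random-sample margin for the 1,020 adults surveyed.
srs_moe = margin_of_error(1020)
print(round(srs_moe, 1))  # prints 3.1

# Design effect that would reproduce the reported 3.2 points.
# This is an inference under the assumptions above, not a published value.
implied_deff = (3.2 / srs_moe) ** 2
print(round(margin_of_error(1020, design_effect=implied_deff), 1))  # prints 3.2
```

Under these assumptions an unweighted sample of 1,020 gives roughly 3.1 points. The reported 3.2 is consistent with a small upward adjustment of the kind pollsters commonly apply for weighting.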