Tech experts worry about the use of AI to optimize profits and social control

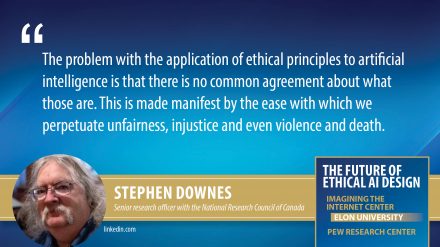

A majority of technology experts worry that the evolution of artificial intelligence by 2030 will continue to be primarily focused on optimizing profits and social control, according to a new report from the Pew Research Center and Elon University’s Imagining the Internet Center. These experts also cite the difficulty of achieving consensus about the ethical norms that should be encoded in the design of artificial intelligence systems. Many who expect progress say it is not likely within the next decade.

This report is part of a long-running series about the future of the internet and is based on a nonscientific canvassing that prompted responses from 602 technology innovators, developers, business and policy leaders, researchers and activists who were asked, “By 2030, will most of the AI systems being used by organizations of all sorts employ ethical principles focused primarily on the public good?” In response, 68% of the respondents chose the option declaring that ethical principles focused primarily on the public good will not be employed in most AI systems by 2030; 32% chose the option positing that ethical principles focused primarily on the public good will be employed in most AI systems by 2030.

The respondents whose insights are shared in this report raised a variety of issues as they explained their answers in an open-ended response format: How do you apply ethics to any situation? Is maximum freedom the ethical imperative or is maximum human safety? Should systems steer clear of activities that substantially impact human agency, allowing people to make decisions for themselves, or should they be set up to intervene when it seems clear that human decision-making may be harmful?

They wrestled with the meaning of such grand concepts as beneficence, nonmaleficence, autonomy and justice when it comes to tech systems.

A share of these respondents began their comments on our question by arguing that the issue is not, “What do we want AI to be?” Instead, they noted the issue should be, “What kind of humans do we want to be? How do we want to evolve as a species?”

Lee Rainie, director of Pew’s Internet and Technology research and a co-author of this study, said, “In addition to expressing concerns over the impact of profit and power motives, these experts also noted that ethical behaviors are difficult to define, implement and enforce on a global scale.”

Janna Anderson, director of Elon’s Imagining the Internet Center, added, “Many said they find hope in the fact that there are a number of major initiatives now underway to address these emerging issues. Some pointed out that we are still in the earliest days of the digital revolution and said they hope that humanity will adjust and find approaches to advancing the evolution of AI in ways that will help build a positive future.”

Anderson and Rainie discussed the findings of the report in a webinar hosted by the Pew Research Center and data.org.

The experts’ responses, gathered in the summer of 2020, sounded many broad themes about the ways in which individuals and groups are adjusting to AI systems. The themes are outlined below, followed by a small selection of expert quotes from the 127-page report.

Worries: The main developers and deployers of AI are focused on profit-seeking and social control, and there is no consensus about what ethical AI would look like

Even as global attention turns to the purpose and impact of artificial intelligence (AI), many experts worry that ethical behaviors and outcomes are hard to define, implement and enforce. They point out that the AI ecosystem is dominated by competing businesses seeking to maximize profits and by governments seeking to surveil and control their populations.

- It is difficult to define “ethical” AI: Context matters. There are cultural differences, and the nature and power of the actors in any given scenario are crucial. Norms and standards are currently under discussion, but global consensus may not be likely. In addition, formal ethics training and emphasis are not embedded in the human systems creating AI.

- Control of AI is concentrated in the hands of powerful companies and governments driven by motives other than ethical concerns: Over the next decade, AI development will continue to be aimed at finding ever-more-sophisticated ways to exert influence over people’s emotions and beliefs in order to convince them to buy goods, services and ideas.

- The AI genie is already out of the bottle, abuses are already occurring, and some are not very visible and hard to remedy: AI applications are already at work in “black box” systems that are opaque at best and, at worst, impossible to dissect. How can ethical standards be applied under these conditions? While history has shown that when abuses arise as new tools are introduced societies always adjust and work to find remedies, this time it’s different. AI is a major threat.

- Global competition, especially between China and the U.S., will matter more to the development of AI than any ethical issues: There is an arms race between the two tech superpowers that overshadows concerns about ethics. Plus, the two countries define ethics in different ways. The acquisition of techno-power is the real impetus for advancing AI systems. Ethics takes a back seat.

Hopes: Progress is being made as AI spreads and shows its value; societies have always found ways to mitigate the problems arising from technological evolution

Artificial intelligence applications are already doing amazing things. Further breakthroughs will only add to this. The unstoppable rollout of new AI is inevitable, as is the development of harm-reducing strategies. AI systems themselves can be used to identify and fix problems arising from unethical systems. The high-level global focus on ethical AI in recent years has been productive and is moving society toward agreement around the idea that AI development should focus on beneficence, nonmaleficence, autonomy and justice.

- AI advances are inevitable; we will work on fostering ethical AI design: Imagine a future where even more applications emerge to help make people’s lives easier and safer. Health care breakthroughs are coming that will allow better diagnosis and treatment, some of which will emerge from personalized medicine that radically improves the human condition. All systems can be enhanced by AI; thus, it is likely that support for ethical AI will grow.

- A consensus around ethical AI is emerging and open-source solutions can help: There has been extensive study and discourse around ethical AI for several years, and it is bearing fruit. Many groups working on this are focusing on the already-established ethics of the biomedical community.

- Ethics will evolve and progress will come as different fields show the way: No technology endures if it broadly delivers unfair or unwanted outcomes. The market and legal systems will drive out the worst AI systems. Some fields will be faster to the mark in getting ethical AI rules and code in place, and they will point the way for laggards.

A Sampling of Expert Quotes in the Pew-Elon Report on the Future of Ethical AI Design

Ben Shneiderman, professor and founder of the Human Computer Interaction Lab, University of Maryland

“True change will come when corporate business choices are designed to limit the activity of malicious actors – criminals, political operatives, hate groups and terrorists – while increasing user privacy.”

Jonathan Grudin, principal researcher with the Natural Interaction Group at Microsoft Research

“The principal use of AI is likely to be finding ever more sophisticated ways to convince people to buy things that they don’t really need, leaving us deeper in debt with no money to contribute to efforts to combat climate change, environmental catastrophe, social injustice and inequality, and so on.”

danah boyd, founder and president of the Data & Society Research Institute and principal researcher at Microsoft

“[Artificial intelligence] systems are primarily being built within the context of late-stage capitalism, which fetishizes efficiency, scale and automation. A truly ethical stance on AI requires us to focus on augmentation, localized context and inclusion, three goals that are antithetical to the values justified by late-stage capitalism.”

Ethan Zuckerman, director of the UMass Institute for Digital Public Infrastructure

“Activists and academics advocating for ethical uses of AI have been remarkably successful in having their concerns aired even as harms of misused AI are just becoming apparent. The campaigns to stop the use of facial recognition because of racial biases is a precursor of a larger set of conversations about serious ethical issues around AI.”

Douglas Rushkoff, author and professor of media at City University of New York

“Why should AI become the very first technology whose development is dictated by moral principles? We haven’t done it before, and I don’t see it happening now. Most basically, the reasons why I think AI won’t be developed ethically is because AI is being developed by companies looking to make money, not to improve the human condition.”

Abigail De Kosnik, director, Center for New Media, University of California-Berkeley

“AI that is geared toward generating revenue for corporations will nearly always work against the interests of society. I and other educators are introducing more ethics training into our instruction. I am hopeful, but I am not optimistic about our chances.”

Adam Clayton Powell III, USC Annenberg Center on Communication Leadership and Policy

“It is clear that governments, especially totalitarian governments in China, Russia, et seq., will want to control AI within their borders, and they will have the resources to succeed. Those governments are only interested in self-preservation, not ethics.”

Frank Kaufmann, president of Twelve Gates Foundation and Values in Knowledge Foundation

“The hope and opportunity to enhance, support and grow human freedom, dignity, creativity and compassion through AI systems excite me. The chance to enslave, oppress and exploit human beings through AI systems concerns me.”

Barry Chudakov, founder and principal of Sertain Research

“Many of our ethical frameworks have been built on dogmatic injunctions: thou shalt and shalt not…. Big data effectively reimagines ethical discourse…. For AI to be used in ethical ways, and to avoid questionable approaches, we must begin by reimagining ethics itself.”

Mike Godwin, former general counsel for the Wikimedia Foundation

“The most likely scenario [for ethical AI] is that some kind of public abuse of AI technologies will come to light, and this will trigger reactive limitations on the use of AI, which will either be blunt-instrument categorical restrictions on its use or (more likely) a patchwork of particular ethical limitations addressed to particular use cases, with unrestricted use occurring outside the margins of these limitations.”

Jamais Cascio, research fellow at the Institute for the Future

“What concerns me the most about the wider use of AI is the lack of general awareness that digital systems can only manage problems that can be put in a digital format. An AI can’t reliably or consistently handle a problem that can’t be quantified.”

Marcel Fafchamps, senior fellow, Center on Democracy, Development and the Rule of Law at Stanford University

“The fact that AI integrates ethical principles does not mean that it integrates ‘your’ preferred ethical principles. So, the question is not whether it integrates ethical principles, but which ethical principles it integrates.”

Stowe Boyd, consulting futurist, expert in technological evolution and the future of work

“I have projected a social movement that would require careful application of AI as one of several major pillars. I’ve called this the Human Spring, conjecturing that a worldwide social movement will arise in 2023, demanding the right to work and related social justice issues, a massive effort to counter climate catastrophe, and efforts to control artificial intelligence. AI, judiciously used, can lead to breakthroughs in many areas. But widespread automation of many kinds of work – unless introduced gradually, and not as fast as profit-driven companies would like – could be economically destabilizing.”

Amy Webb, founder of the Future Today Institute

“When it comes to AI, we should pay close attention to China, which has talked openly about its plans for cyber sovereignty. But we should also remember that there are cells of rogue actors who could cripple our economies simply by mucking with the power or traffic grids, causing traffic spikes on the internet or locking us out of our connected home appliances.”

Susan Crawford, professor at Harvard Law School

“We have no basis on which to believe that the animal spirits of those designing digital processing services [AI], bent on scale and profitability, will be restrained by some internal memory of ethics, and we have no institutions that could impose those constraints externally.”

Gary A. Bolles, chair for the future of work at Singularity University

“I hope we will shift the mindset of engineers, product managers and marketers from ethics and human centricity as a tack-on after AI products are released, to a model that guarantees ethical development from inception. Everyone in the technology development food chain will have the tools and incentives to ensure the creation of ethical and beneficial AI-related technologies, so there is no additional effort required.”

David Brin, physicist, futures thinker and author of “Earth” and “Existence”

“If AIs are many and diverse and reciprocally competitive, then it will be in their individual interest to keep an eye on each other and report bad things, because doing so will be to their advantage. This depends on giving them a sense of separate individuality. It is a simple recourse, alas seldom even discussed.”

Michael G. Dyer, professor emeritus of computer science at UCLA, expert in natural language processing

“I am concerned, and also convinced that at some point within the next 300 years humanity will be replaced by its own creations, once they become sentient and more intelligent than ourselves. Computers are already smarter at many tasks, but they are not an existential threat to humanity (at this point) because they lack sentience…. AI systems are right now being evolved to survive (and learn) in simulated environments and such systems, if given language comprehension abilities … would then achieve a form of sentience.”

Esther Dyson, internet pioneer, journalist, entrepreneur and executive founder of Wellville

“The more we use AI to reveal … previously hidden patterns, the better for us all. A lot depends on society’s willingness to look at the truth and to act/make decisions accordingly. With luck, a culture of transparency will cause this to happen.”

Brad Templeton, internet pioneer, futurist, activist and chair emeritus of the Electronic Frontier Foundation

“When we start to worry about AI with agency – making decisions on its own – it is understandable why people worry about that. Unfortunately, relinquishment of AI development is not a choice. It just means the AIs of the future are built by others, which is to say your rivals. You can’t pick a world without AI; you can only pick a world where you have it or not.”

David Karger, professor at MIT’s Computer Science and Artificial Intelligence Laboratory

“In 2030, AI systems will continue to be machines that do what their human users tell them to do. So, the important question is whether their human users will employ ethical principles focused primarily on the public good. Since that isn’t true now, I don’t expect it will be true in 2030 either.”

Beth Noveck, director, NYU Governance Lab

“If we are to realize the positive benefits of AI, we first need to change the governance of AI and ensure that these technologies are designed in a more participatory fashion with input and oversight from diverse audiences, including those most affected by the technologies.”

Sam S. Adams, senior research scientist in artificial intelligence for RTI International

“The AI genie is completely out of the bottle already, and by 2030 there will be dramatic increases in the utility and universal access to advanced AI technology. This means there is practically no way to force ethical use in the fundamentally unethical fractions of global society. The multi-millennial problem with ethics has always been: Whose ethics? Who decides and then who agrees to comply? That is a fundamentally human problem that no technical advance or even existential threat will totally eliminate.”

Joël Colloc, professor of computer sciences at Le Havre University, Normandy

“Rabelais used to say, ‘Science without conscience is the ruin of the soul.’ Science provides powerful tools. When these tools are placed only in the hands of private interests, for the sole purpose of making profit and getting even more money and power, the use of science can lead to deviance.”

Paul Jones, professor emeritus of information science at the University of North Carolina – Chapel Hill

“For most of us, the day-to-day conveniences of AI by far outweigh the perceived dangers. Dangers will come on slow and then cascade before most of us notice.”

Dan S. Wallach, a professor in the systems group at Rice University’s Department of Computer Science

“Without a doubt, AI will do great things for us, whether it’s self-driving cars that significantly reduce automotive death and injury, or whether it is computers reading radiological scans and identifying tumors earlier in their development than any human radiologist might do reliably. But AI will also be used in horribly dystopian situations, such as China’s rollout of facial-recognition camera systems throughout certain western provinces in the country.”

Kathleen M. Carley, director of the Center for Computational Analysis of Social and Organizational Systems at Carnegie Mellon University

“What gives me the most hope is that most people, regardless of where they are from, want AI and technology in general to be used in more ethical ways. What worries me the most is that without a clear understanding of the ramifications of ethical principles, we will put in place guidelines and policies that will cripple the development of new technologies that would better serve humanity.”

The full report features a selection of the most comprehensive overarching responses shared by the 602 thought leaders participating in the nonrandom sample.

Read the full report as published by Elon University’s Imagining the Internet Center: https://www.elon.edu/u/imagining/surveys/xii-2021/ethical-ai-design-2030/