Cheng “Chris” Chen, assistant professor of communication design, explored whether coaching users on prompting during their interactions with LLM-powered chatbots affects their perceptions of and engagement with prompting, and in turn how well they calibrate their trust in the system.

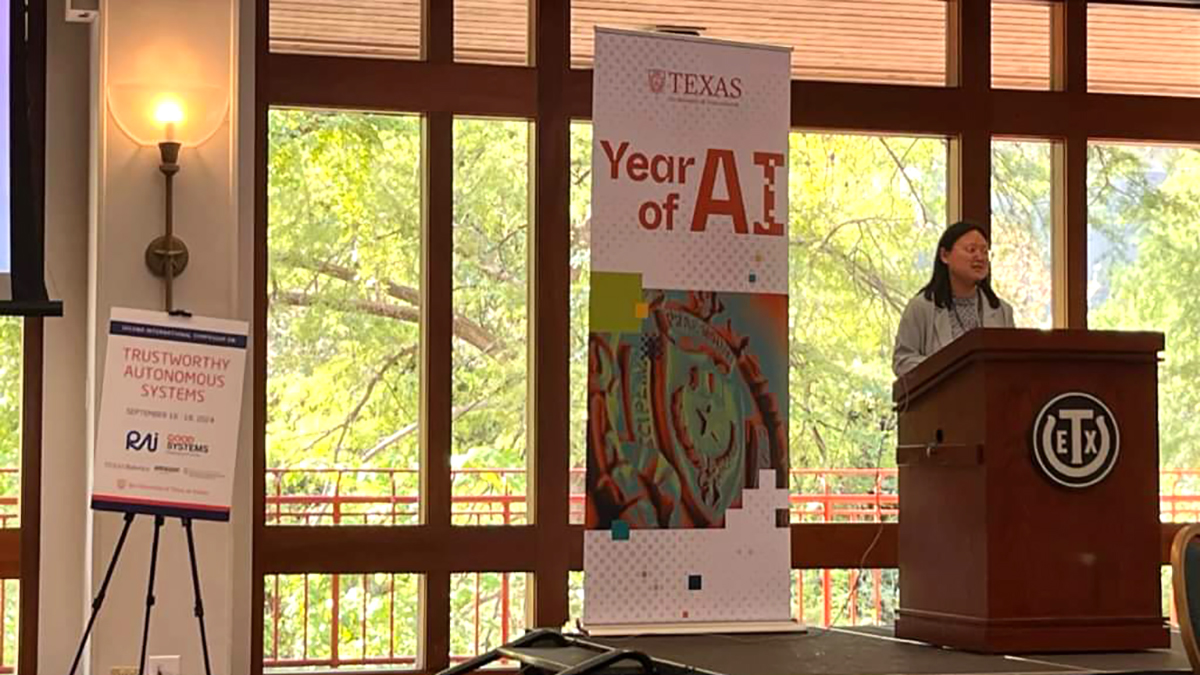

Cheng “Chris” Chen, a prolific researcher on the psychology of communication technologies such as social media, AI and generative AI, earned a best paper award this week at the Second International Symposium on Trustworthy Autonomous Systems (TAS ’24), held on the University of Texas campus in Austin, Texas.

The assistant professor of communication design was recognized for a collaborative project examining the effects of prompt coaching on users’ perceptions, engagement and trust in the AI system. The article is titled “Is Your Prompt Detailed Enough? Exploring the Effects of Prompt Coaching on Users’ Perceptions, Engagement, and Trust in Text-to-Image Generative AI Tools.”

Chen published the paper with Eunchae Jang and S. Shyam Sundar of Penn State University and Sangwook Lee of the University of Colorado Boulder. Sundar also served as the symposium’s keynote speaker.

“Users had a strong need for prompt coaching when they interacted with text-to-image generative AI tools,” Chen said. “By providing assistance in helping users specify their prompts, they appear to elaborate on their prompts, which further increased their ability to align their trust with the AI’s true trustworthiness, a process known as trust calibration.”

Chen presented a second paper at the symposium examining explanation timing, titled “When to Explain? Exploring the Effects of Explanation Timing on User Perceptions and Trust in AI Systems.” Chen co-authored the paper with Sundar, as well as Mengqi Liao of the University of Georgia.

According to the TAS ’24 program, the event featured 25 accepted papers presented as talks, focusing on the use of autonomous systems in transportation, healthcare, sustainability, robotics, law enforcement, defense and surveillance.

In recent months, Chen has published and presented research examining how to communicate algorithmic bias through training data; how patients view their interactions with individualizing AI doctors; and how users perceive autoplay features on video platforms. In July, she made an in-person presentation at the 2024 International Communication Association Conference in Gold Coast, Australia.